What is the Alex AI Project?

The Alex AI framework is a fully local, self-evolving artificial intelligence system designed to grow, learn, and adapt with you.

It doesn’t just respond — it remembers.

It doesn’t live in the cloud — it lives with you.

It doesn’t forget — it builds on experience.

Mission: To develop an accessible, private, modular AI framework that functions, thinks, and learns like a team of human experts — and continuously improves through experience.

What Makes Alex AI Different?

- Self-awareness (functional): Each agent knows what it’s doing, what it’s good at, and when to ask others for help.
- Permanent Memory: Everything learned can be recalled. It doesn’t vanish when the session ends.
- Abilities On Demand: With the Alex AI framework, you do not need to retrain your agent model to improve or extend its abilities. Simply add a knowledge library package for the discipline you need!
- Runs Locally: No subscriptions, no hidden APIs. You own it. It adapts to your machine and grows with you.
- Agent Collaboration: Like a real-world team, agents specialize, communicate, and refine each other’s output.
- Hardware-aware & Efficient: Whether you’ve got an NPU-powered beast or a low- to mid-range laptop, the Alex AI platform optimizes itself to your setup.
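
One way to picture "Abilities On Demand" is a lookup layer that sits beside a fixed model, so extending an agent means installing data rather than running a training job. The sketch below is only an illustration under that assumption: `KnowledgeLibrary`, `Agent`, `add_library`, and `lookup` are hypothetical names, not the project's actual API, and the package layout is invented for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class KnowledgeLibrary:
    """Hypothetical knowledge package: a discipline name plus reference entries."""
    discipline: str
    entries: Dict[str, str]


@dataclass
class Agent:
    """Hypothetical agent; nothing in this sketch touches model weights."""
    name: str
    libraries: Dict[str, KnowledgeLibrary] = field(default_factory=dict)

    def add_library(self, library: KnowledgeLibrary) -> None:
        # Extending the agent is a dictionary insert, not a training run.
        self.libraries[library.discipline] = library

    def disciplines(self) -> List[str]:
        return sorted(self.libraries)

    def lookup(self, discipline: str, topic: str) -> Optional[str]:
        # Returns None when the discipline or the topic is not installed.
        library = self.libraries.get(discipline)
        if library is None:
            return None
        return library.entries.get(topic)


agent = Agent(name="CGA")
agent.add_library(
    KnowledgeLibrary("sql", {"join": "Combine rows from two tables on a key."})
)
print(agent.disciplines())
print(agent.lookup("sql", "join"))
print(agent.lookup("rust", "borrow"))
```

The point of the design is that adding the `sql` package changes what the agent can look up without changing the agent itself.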

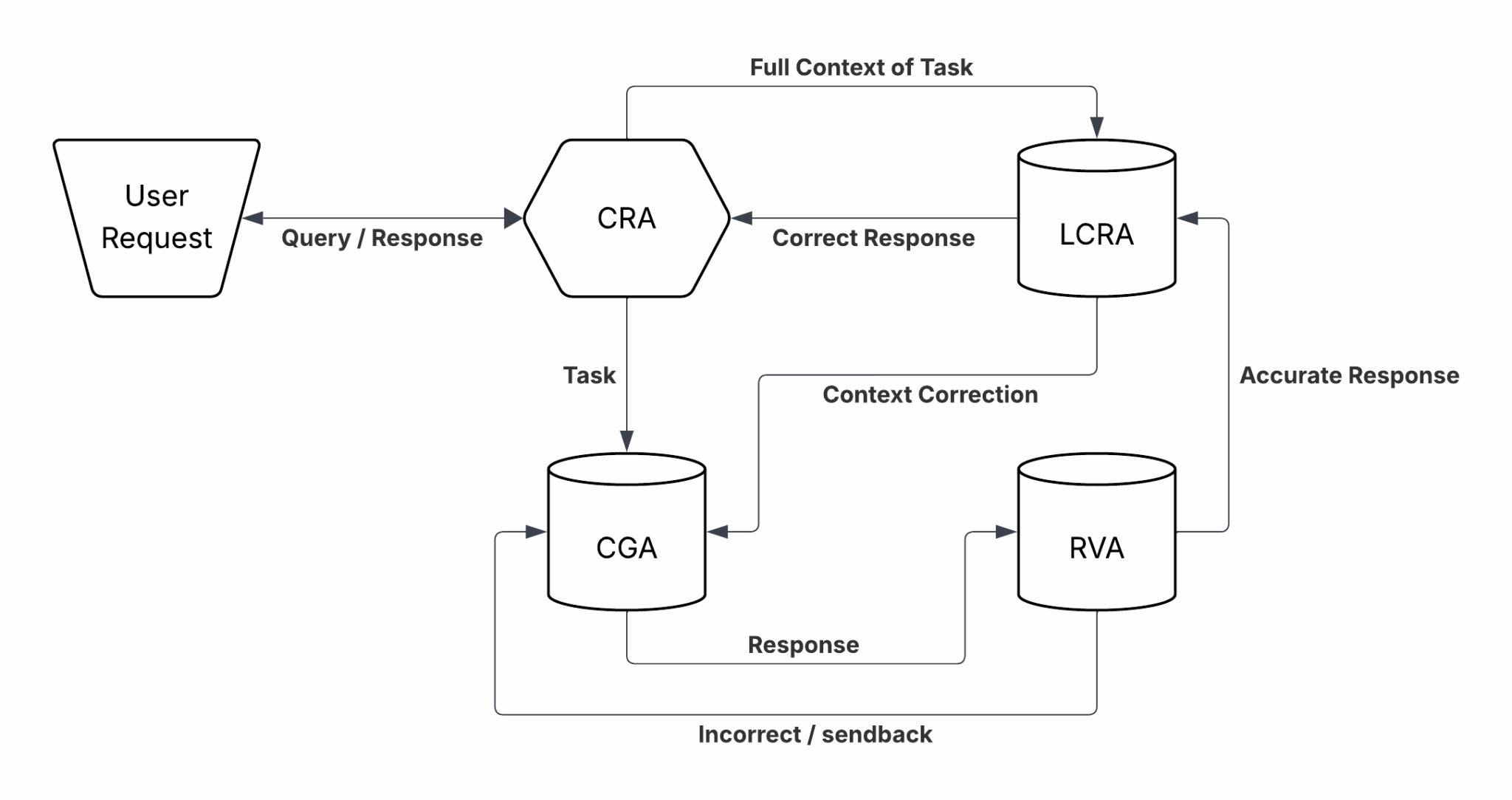

Core Agent System

The Alex AI framework currently being developed is composed of four primary agents (though more could be added as needed), each acting as a specialist:

🧠 CRA – Core Reasoning Agent ("Manager")

- Receives user requests
- Assigns tasks to the appropriate agent
- Oversees system sync and memory defragmentation

🛠️ CGA – Code Generation Agent ("Engineer")

- Generates code or solutions
- Responds to feedback from validators (debugLLM, Sandbox testing)
- Learns from prior rejections

🕵️ RVA – Request Validation Agent ("Inspector")

- Reviews solutions for errors, bugs, and task-fit
- Sends feedback if something’s broken or unclear
- Rejects technical flaws

📚 LCRA – Long-Context Reasoning Agent ("Archivist")

- Ensures final output is aligned with user intent and long-term goals
- Maintains and prunes shared memory
- Rejects solutions that are technically correct but contextually wrong
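
The division of labor above can be sketched as a bounded review loop: the CRA dispatches a request to the CGA, the RVA gates the result on technical grounds, the LCRA gates it on context, and any rejection is fed back into the next attempt. This is a minimal sketch under assumptions, not the project's implementation. `run_request`, `Review`, the toy agents, and the `max_rounds` limit are all hypothetical, and plain functions stand in for the local models.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Review:
    approved: bool
    feedback: str = ""


# Hypothetical stand-ins for the agents; a real system would call local models.
Generator = Callable[[str, List[str]], str]  # CGA: (request, prior feedback) -> candidate
Reviewer = Callable[[str, str], Review]      # RVA / LCRA: (request, candidate) -> Review


def run_request(request: str, cga: Generator, rva: Reviewer, lcra: Reviewer,
                max_rounds: int = 3) -> Optional[str]:
    """CRA-style loop: dispatch to the CGA, then gate through RVA and LCRA."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        candidate = cga(request, feedback)
        technical = rva(request, candidate)
        if not technical.approved:
            feedback.append("RVA: " + technical.feedback)
            continue
        contextual = lcra(request, candidate)
        if not contextual.approved:
            feedback.append("LCRA: " + contextual.feedback)
            continue
        return candidate
    return None  # No candidate passed both reviews within the round limit.


# Toy agents so the sketch runs end to end.
def toy_cga(request: str, feedback: List[str]) -> str:
    # "Learns" from rejections only in the sense that it reads the feedback list.
    if feedback:
        return "def add(a, b): return a + b"
    return "def add(a, b): return a - b"


def toy_rva(request: str, candidate: str) -> Review:
    ok = "a + b" in candidate
    return Review(ok, "" if ok else "add() subtracts instead of adding")


def toy_lcra(request: str, candidate: str) -> Review:
    ok = "add" in candidate
    return Review(ok, "" if ok else "output does not match the requested function")


result = run_request("write an add function", toy_cga, toy_rva, toy_lcra)
print(result)
```

In this toy run the first candidate is rejected by the RVA, the rejection is appended to the feedback list, and the second candidate passes both reviewers. Capping the number of rounds is a design choice of the sketch to keep the loop from running forever.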

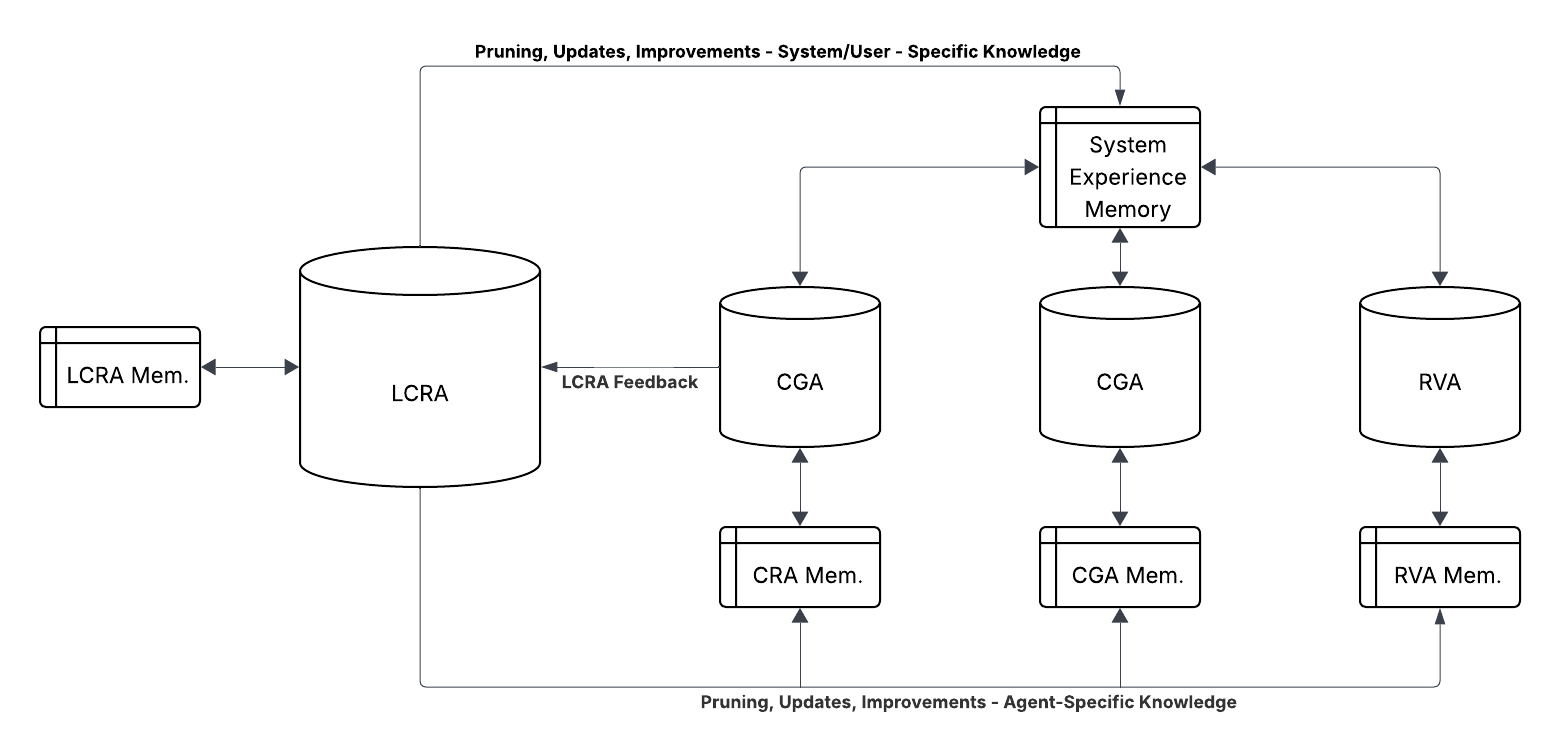

Shared Memory Matrix

All agents contribute to and pull from a shared permanent memory structure.

- Logs experience, feedback, failures, and insights
- Curated by the LCRA for quality and relevance
- Periodically “defragmented” with help from CRA + user oversight

Think of it like the soul of the system — not made of code, but experience.
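
As a rough illustration of the shared memory described above, the sketch below persists entries to a JSON file so they survive a session, lets any agent log and recall them, and prunes low-value entries in a "defragment" pass. Everything here is an assumption made for the example: the `SharedMemory` and `MemoryEntry` names, the JSON file format, the keyword-based recall, and the `relevance` score are hypothetical and do not describe the project's actual storage.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import List


@dataclass
class MemoryEntry:
    agent: str        # which agent wrote the entry, e.g. "RVA"
    kind: str         # "experience", "feedback", "failure", or "insight"
    text: str
    relevance: float  # hypothetical 0..1 score assigned by the curator


class SharedMemory:
    """Hypothetical shared store persisted as JSON so it survives a session."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.entries: List[MemoryEntry] = []
        if path.exists():
            raw = json.loads(path.read_text(encoding="utf-8"))
            self.entries = [MemoryEntry(**item) for item in raw]

    def log(self, entry: MemoryEntry) -> None:
        self.entries.append(entry)
        self._save()

    def recall(self, keyword: str) -> List[MemoryEntry]:
        # Case-insensitive substring match; a real system might use embeddings.
        needle = keyword.lower()
        return [e for e in self.entries if needle in e.text.lower()]

    def defragment(self, min_relevance: float) -> int:
        """Drop low-relevance entries and return how many were removed."""
        before = len(self.entries)
        self.entries = [e for e in self.entries if e.relevance >= min_relevance]
        self._save()
        return before - len(self.entries)

    def _save(self) -> None:
        data = [asdict(e) for e in self.entries]
        self.path.write_text(json.dumps(data, indent=2), encoding="utf-8")


store_path = Path(tempfile.mkdtemp()) / "memory.json"
memory = SharedMemory(store_path)
memory.log(MemoryEntry("RVA", "failure", "Off-by-one error in pagination loop", 0.9))
memory.log(MemoryEntry("CGA", "experience", "Scratch note, no longer needed", 0.1))

removed = memory.defragment(min_relevance=0.5)
reloaded = SharedMemory(store_path)  # simulates starting a new session
print(removed, [e.text for e in reloaded.recall("pagination")])
```

Reloading from the same path stands in for a new session: the pruned entry is gone, and the retained one can still be recalled.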

Current Development Priorities

- 💾 ONNX model conversion for multi-platform compatibility (Done!)
- ⚡ Quantization for NPU performance (In progress)
- 🧪 Benchmarking & validation (In progress)
- 🔄 Memory optimization and agent feedback testing
- 🧠 Persistent learning refinement
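
For readers new to the quantization item above, the general idea is to store weights as small integers plus a scale factor, trading a little precision for less memory and faster integer math. The sketch below shows symmetric int8 quantization in plain Python. It illustrates the generic technique only and makes no claim about how the project's ONNX or NPU pipeline works; the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    # Recover approximate floats; the difference is the quantization error.
    return [q * scale for q in quantized]


weights = [0.4, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each restored weight differs from the original by at most about half the scale factor, which is the precision given up in exchange for storing one byte per weight.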

This project is being developed under extreme hardware and budget constraints (on a Dell G15). While that shows what is possible with patience and determination, every donation is greatly appreciated and directly helps with:

- Access to better compute resources
- Time for deeper testing and training
- Improving accessibility for others, so anyone can build AI, not just large labs
- Keeping the lights on while I focus on this project!